Face Detection Using Python

Face detection can be regarded as a specific case of object-class detection. In object-class detection, the task is to find the locations and sizes of all objects in an image that belong to a given class. Examples include upper torsos, pedestrians, and cars.

Face-detection algorithms focus on the detection of frontal human faces. This is analogous to image matching, in which an image of a person is compared bit by bit with an image stored in a database. Any change to the facial features in the database image will invalidate the matching process.

One reliable face-detection approach is based on a genetic algorithm and the eigenface[3] technique:[4]

First, the possible human eye regions are detected by testing all the valley regions in the gray-level image. Then a genetic algorithm is used to generate all the possible face regions, which include the eyebrows, the iris, the nostrils, and the mouth corners.
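The eye-candidate search can be sketched in NumPy. As an assumption (the exact valley test is not specified above), a pixel counts as part of a valley when the brightest pixel in its neighbourhood exceeds it by more than a threshold; this is a grayscale dilation minus the image, a simplified relative of OpenCV's black-hat transform. The function `valley_mask` and its `window` and `depth` parameters are hypothetical, and the genetic-algorithm search over face regions is not shown.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def valley_mask(gray, window=5, depth=40):
    """Mark pixels that are much darker than the brightest pixel around them.

    Hypothetical valley test: (local maximum over a window x window
    neighbourhood) minus the pixel value must exceed `depth`.
    """
    gray = np.asarray(gray, dtype=np.int32)
    pad = window // 2
    # Replicate edges so every pixel has a full neighbourhood
    padded = np.pad(gray, pad, mode="edge")
    # Local maximum (grayscale dilation) over each window
    local_max = sliding_window_view(padded, (window, window)).max(axis=(-2, -1))
    return (local_max - gray) > depth


# Usage: a synthetic bright 20x20 "face" with two dark 3x3 "eyes"
img = np.full((20, 20), 200, dtype=np.uint8)
img[6:9, 4:7] = 50
img[6:9, 13:16] = 50
mask = valley_mask(img)
print(mask.sum())
```

Only the dark eye blocks are flagged; in a real image the resulting mask would still contain many non-eye valleys, which is why the later fitness and verification stages are needed.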

Each possible face candidate is normalized to reduce both the lighting effect caused by uneven illumination and the shearing effect caused by head movement. The fitness value of each candidate is measured based on its projection onto the eigenfaces. After a number of iterations, all the face candidates with a high fitness value are selected for further verification. At this stage, the face symmetry is measured and the presence of the different facial features is verified for each face candidate.
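The fitness measure can be illustrated with a small PCA sketch. Assumptions: the eigenfaces are the top principal components of a set of training face vectors (computed here with `np.linalg.svd`), lighting normalization is approximated by a zero-mean, unit-variance rescaling, and fitness is defined as the fraction of a candidate's mean-subtracted energy captured by its projection onto the eigenfaces. The exact fitness formula is not given above, so `normalize`, `eigenfaces`, and `fitness` are hypothetical names and definitions, and the training data is synthetic.

```python
import numpy as np


def normalize(patch):
    """Crude lighting normalization: zero mean, unit variance."""
    v = np.asarray(patch, dtype=float).ravel()
    return (v - v.mean()) / (v.std() + 1e-8)


def eigenfaces(train, k):
    """Return the mean face and the top-k eigenfaces (as rows) of the training set."""
    X = np.stack([normalize(p) for p in train])
    mean = X.mean(axis=0)
    # Rows of Vt are orthonormal principal directions of the centred data
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]


def fitness(candidate, mean, basis):
    """Fraction of the candidate's centred energy lying in the eigenface subspace."""
    d = normalize(candidate) - mean
    coeffs = basis @ d  # projection onto the eigenfaces
    total = d @ d
    return float(coeffs @ coeffs / total) if total > 0 else 0.0


# Usage with synthetic "faces": random mixtures of two fixed 64-pixel patterns
rng = np.random.default_rng(0)
patterns = rng.normal(size=(2, 64))
train = [a * patterns[0] + b * patterns[1] + 100 for a, b in rng.normal(size=(20, 2))]
mean, basis = eigenfaces(train, k=2)
candidate = 0.7 * patterns[0] - 1.2 * patterns[1] + 50  # same family, different brightness
print(fitness(candidate, mean, basis), fitness(rng.normal(size=64), mean, basis))
```

A face-like candidate scores close to 1, while a random patch scores low because most of its energy lies outside the eigenface subspace.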

```python
# Import libraries
import cv2

# Load the pre-trained Haar cascade classifiers for face and eye detection.
# The 'Haarcascades/' folder must contain OpenCV's cascade XML files
# (they also ship with opencv-python under cv2.data.haarcascades).
face_classifier = cv2.CascadeClassifier('Haarcascades/haarcascade_frontalface_default.xml')
eye_classifier = cv2.CascadeClassifier('Haarcascades/haarcascade_eye.xml')


def face_detector(img):
    # Convert the image to grayscale; the cascades work on single-channel images
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # scaleFactor=1.3, minNeighbors=5
    faces = face_classifier.detectMultiScale(gray, 1.3, 5)
    if len(faces) == 0:
        return img

    # For each face, widen the box 100 px up and left, then look for eyes in that ROI
    for (x, y, w, h) in faces:
        x1, y1 = max(x - 100, 0), max(y - 100, 0)  # clamp so slicing stays in bounds
        x2, y2 = x + w, y + h
        cv2.rectangle(img, (x1, y1), (x2, y2), (255, 0, 0), 2)
        roi_gray = gray[y1:y2, x1:x2]
        roi_color = img[y1:y2, x1:x2]
        eyes = eye_classifier.detectMultiScale(roi_gray)
        for (ex, ey, ew, eh) in eyes:
            cv2.rectangle(roi_color, (ex, ey), (ex + ew, ey + eh), (0, 0, 255), 2)

    # Mirror the (last) face region horizontally and return it for display
    return cv2.flip(roi_color, 1)


# Webcam setup for face detection
cap = cv2.VideoCapture(0)
while True:
    ret, frame = cap.read()
    if not ret:  # stop if no frame could be read from the camera
        break
    cv2.imshow('Our Face Extractor', face_detector(frame))
    if cv2.waitKey(1) == 13:  # 13 is the Enter key
        break

# When everything is done, release the capture
cap.release()
cv2.destroyAllWindows()
```

How Face Detection Works

There are many techniques for detecting faces, and with their help faces can be identified with high accuracy. Whether they are implemented with OpenCV, neural networks, or MATLAB, these techniques follow broadly the same procedure, whose goal is to find every face, possibly several, in an image. Here we use OpenCV, and face detection proceeds through the following steps:

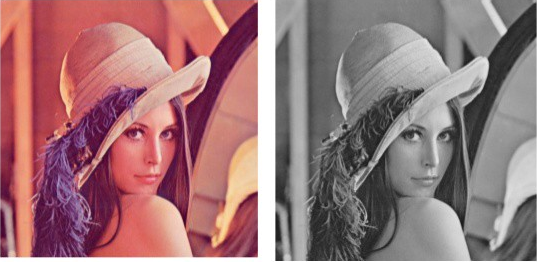

First, the image is loaded by providing its location. The picture is then converted from color (RGB, stored as BGR in OpenCV) to grayscale, because faces are easier to detect in a single-channel intensity image.
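The color-to-grayscale step is a weighted sum of the three channels. OpenCV documents the conversion used by `cv2.COLOR_BGR2GRAY` as Y = 0.299 R + 0.587 G + 0.114 B; the NumPy sketch below reproduces that formula for an image in OpenCV's BGR channel order. The function name `bgr_to_gray` is hypothetical, and its rounding may differ from OpenCV's fixed-point implementation by one gray level on some pixels.

```python
import numpy as np


def bgr_to_gray(img):
    """Convert an H x W x 3 uint8 image in BGR order to an H x W grayscale image."""
    b, g, r = (img[..., i].astype(np.float64) for i in range(3))
    gray = 0.299 * r + 0.587 * g + 0.114 * b
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


# Usage: one pure-red, one pure-green and one pure-blue pixel (BGR order)
img = np.array([[[0, 0, 255], [0, 255, 0], [255, 0, 0]]], dtype=np.uint8)
print(bgr_to_gray(img))
```

Green contributes most to perceived brightness, so the pure-green pixel comes out much lighter than the pure-blue one.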

After that, image manipulation is applied: the image is resized, cropped, blurred, or sharpened if needed. The next step is image segmentation, which is used for contour detection or to separate the multiple objects in a single image so that the classifier can quickly detect the objects and faces in the picture.
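The optional manipulation and segmentation steps can be sketched in plain NumPy; in OpenCV they correspond to calls such as `cv2.resize`, `cv2.blur`, `cv2.threshold`, and `cv2.findContours`. The sketch assumes a 2x downscale by block averaging, a 3x3 box blur, and segmentation by a global threshold at the image mean, a simple stand-in for contour-based segmentation. The helper names `downscale2`, `box_blur3`, and `segment` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def downscale2(gray):
    """Halve width and height by averaging each 2x2 block (an odd last row/column is dropped)."""
    h, w = gray.shape[0] // 2 * 2, gray.shape[1] // 2 * 2
    g = gray[:h, :w].astype(np.float64)
    return g.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def box_blur3(gray):
    """3x3 mean filter with edge replication (similar in spirit to cv2.blur)."""
    padded = np.pad(gray.astype(np.float64), 1, mode="edge")
    return sliding_window_view(padded, (3, 3)).mean(axis=(-2, -1))


def segment(gray):
    """Binary mask of pixels brighter than the image mean."""
    return gray > gray.mean()


# Usage: a dark 8x8 image with a bright 4x4 square in the top-left corner
img = np.zeros((8, 8), dtype=np.uint8)
img[:4, :4] = 200
small = downscale2(img)  # 4x4 with a bright 2x2 corner
mask = segment(box_blur3(small))
print(small.shape, mask.sum())
```

The blur spreads the bright square slightly, so the segmented region is a little larger than the square itself; blurring first makes the threshold less sensitive to single noisy pixels.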

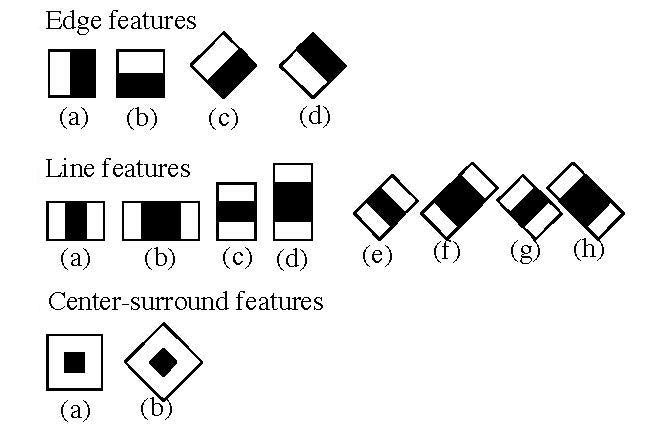

The next step is to apply the Haar-like features algorithm, proposed by Viola and Jones for face detection. This algorithm is used to find the location of human faces in a frame or image. All human faces share some universal properties: for example, the eye region is darker than its neighbouring pixels, and the nose region is brighter than the eye region.
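Viola and Jones evaluate Haar-like features quickly with an integral image (summed-area table), in which the sum of any rectangle takes four lookups. The sketch below computes a two-rectangle feature that compares a darker upper band (such as the eyes) with a brighter band beneath it. The function names, the feature layout, and the sign convention (bottom minus top) are illustrative choices, not the trained features stored in OpenCV's cascade files.

```python
import numpy as np


def integral_image(gray):
    """Summed-area table with a zero first row/column: ii[y, x] = sum of gray[:y, :x]."""
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left corner is (x, y), using four lookups."""
    return int(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])


def two_rect_feature(ii, x, y, w, h):
    """Bottom half minus top half: large when a dark band sits above a bright band."""
    half = h // 2
    top = rect_sum(ii, x, y, w, half)
    bottom = rect_sum(ii, x, y + half, w, half)
    return bottom - top


# Usage: 4x4 patch whose top two rows are dark (eyes) and bottom two bright (cheeks)
patch = np.array([[10] * 4, [10] * 4, [200] * 4, [200] * 4], dtype=np.uint8)
ii = integral_image(patch)
print(rect_sum(ii, 0, 0, 4, 4), two_rect_feature(ii, 0, 0, 4, 4))
```

Because each rectangle sum costs the same four lookups regardless of its size, the detector can evaluate thousands of features at many scales without re-summing pixels.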

The Haar-like algorithm is also used for feature selection or feature extraction for an object in an image, using edge, line, and centre features to detect the eyes, nose, mouth, etc. in the picture. It selects the essential features in an image and extracts them for face detection.
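In the Viola-Jones detector, the essential features are selected with AdaBoost: each boosting round picks the single feature and threshold (a decision stump) with the lowest weighted error on the training windows. The sketch below shows only one such selection round. The array layout (one row per training window, one column per precomputed Haar feature value), the toy data, and the function name `best_stump` are assumptions for illustration.

```python
import numpy as np


def best_stump(features, labels, weights):
    """Pick the (feature, threshold, polarity, error) with the lowest weighted error.

    features: (n_samples, n_features) array of Haar feature values
    labels:   1 for face windows, 0 for non-face windows
    weights:  non-negative sample weights that sum to 1
    A sample is classified as a face when polarity * value < polarity * threshold.
    """
    best = (None, None, None, np.inf)
    for j in range(features.shape[1]):
        for t in np.unique(features[:, j]):
            for polarity in (1, -1):
                pred = (polarity * features[:, j] < polarity * t).astype(int)
                err = weights[pred != labels].sum()
                if err < best[3]:
                    best = (j, t, polarity, err)
    return best


# Usage: feature 0 is uninformative, feature 1 is high for faces
features = np.array([[5, 900], [1, 850], [4, 100], [2, 150]])
labels = np.array([1, 1, 0, 0])
weights = np.full(4, 0.25)
print(best_stump(features, labels, weights))
```

In full AdaBoost the weights of misclassified windows would then be increased and the search repeated, so later rounds favour features that fix earlier mistakes.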

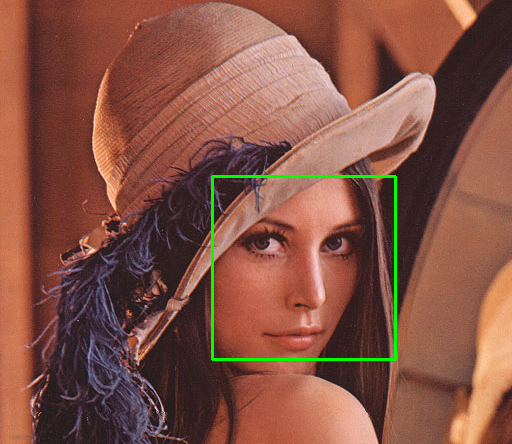

The next step is to use the coordinates x, y, w, h, which define a rectangular box marking the location of the face, that is, the region of interest (ROI) in the image. A rectangle is then drawn around the area where the face was detected. Many other detection techniques are also used alongside face detection, such as smile detection, eye detection, and blink detection.
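The (x, y, w, h) bookkeeping can be made concrete. `detectMultiScale` returns each detection as x, y (top-left corner), w (width), and h (height), so the opposite corner is (x + w, y + h) and, because NumPy indexes rows first, the ROI is the slice `img[y:y+h, x:x+w]`. Detections made inside a ROI, such as eyes, are relative to that ROI, so the face's x and y must be added to place them on the full image. The helper names `roi` and `to_image_coords` are hypothetical, and the sample boxes are made up.

```python
import numpy as np


def roi(img, box):
    """Return the region of interest for an (x, y, w, h) box (rows are y, columns are x)."""
    x, y, w, h = box
    return img[y:y + h, x:x + w]


def to_image_coords(face_box, inner_box):
    """Shift a box detected inside a face ROI back into full-image coordinates."""
    fx, fy, _, _ = face_box
    ex, ey, ew, eh = inner_box
    return (fx + ex, fy + ey, ew, eh)


# Usage with made-up boxes on a blank 480x640 colour frame
frame = np.zeros((480, 640, 3), dtype=np.uint8)
face = (200, 100, 150, 180)  # x, y, w, h
eye = (30, 40, 35, 25)       # relative to the face ROI
print(roi(frame, face).shape, to_image_coords(face, eye))
```

Since the ROI slice is a view into the frame, drawing on it (as the eye rectangles do in the webcam code) also draws on the full image.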
